Writing Parquet Records from Java

- Thursday September 26 2019

- java serialization

Recently, I found myself needing to write out some records in Parquet format. I've been aware of the existence of Parquet for a while, but never really had the opportunity to use it for anything. I wanted to do this from Java, without using any application framework. My problem was very simple: I had a small number of records that easily fit in application memory in a Java ArrayList, and they needed to be written out into a Parquet file. This was the opposite of a "Big Data" problem.

Even for small problems, there are advantages to using Parquet. For example:

- Parquet records are written with a definite schema

- Parquet files can be read by many other applications in the Apache ecosystem

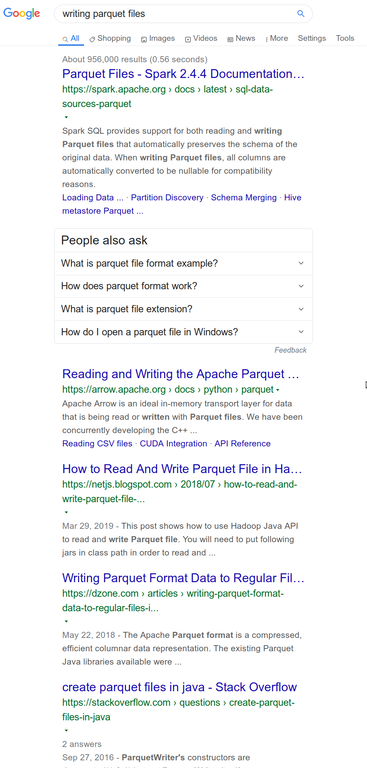

Since I had no experience with Parquet, I turned to Google to try and find some example material to use as a reference. This is what Google showed

I expected this to be trivial, with countless examples available. Instead, what I found on Google was a mix of different libraries and quite a few examples of how to write out Parquet records from frameworks such as Apache Spark. Apache Spark certainly has its place, but in my case I didn't want to add an application framework just so I could write out a small number of records in Parquet.

After some searching, I concluded that there was no simple example showing how to do this. There are in fact many examples on Stack Overflow showing the basic idea, and I learned from them, but most of these are fragments rather than working examples. I wanted to come up with the smallest amount of code that would still work, so I dug into the material I could find and identified the following classes:

- org.apache.parquet.hadoop.ParquetWriter - this is the core class that can write data to a file in Parquet format

- org.apache.parquet.schema.MessageType - instances of this class define the format (schema) of what is written in Parquet

- org.apache.parquet.hadoop.metadata.CompressionCodecName - this enumeration identifies the compression format used when writing Parquet
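To make MessageType and CompressionCodecName a bit more concrete, here is a small sketch that builds a MessageType in code with the Types builder from parquet-column, using the same UserRank record name and userId and rank fields as the rest of this post, and then lists the codecs the enumeration defines. The class name ManualSchemaExample is my own, and the sketch assumes the Parquet libraries are on the classpath.

```java
import org.apache.parquet.hadoop.metadata.CompressionCodecName;
import org.apache.parquet.schema.MessageType;
import org.apache.parquet.schema.PrimitiveType.PrimitiveTypeName;
import org.apache.parquet.schema.Types;

// Hypothetical helper class, not part of the original example project.
final class ManualSchemaExample {
    // Builds a Parquet schema with two required 32-bit integer columns.
    static MessageType userRankSchema() {
        return Types.buildMessage()
            .required(PrimitiveTypeName.INT32).named("userId")
            .required(PrimitiveTypeName.INT32).named("rank")
            .named("UserRank");
    }

    public static void main(String[] args) {
        // MessageType.toString() prints the schema in Parquet's textual schema syntax.
        System.out.println(userRankSchema());

        // Lists every codec the enumeration defines; whether a codec actually works
        // at write time depends on the compression libraries available at runtime.
        for (CompressionCodecName codec : CompressionCodecName.values()) {
            System.out.println(codec);
        }
    }
}
```

Building the schema by hand like this is one option; the approach in the rest of this post lets Avro produce it instead.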

So let's say you have a very simple set of data, shown here in JSON format:

```json
[
  {"user_id": 1, "rank": 3},
  {"user_id": 2, "rank": 0},
  {"user_id": 3, "rank": 100}
]
```

These objects all share the same schema. I am reasonably certain that it is possible to assemble the above classes directly to write out simple records like my example dataset. However, after looking into it, it quickly became apparent that it is simpler to define an Apache Avro schema and then use the Java class generated from it to write those objects out in Parquet format.
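For the curious, this is roughly what assembling those classes directly can look like. It is a sketch, not a recommendation: it relies on ExampleParquetWriter and SimpleGroupFactory from the org.apache.parquet.example object model in parquet-mr, which, as the package name suggests, is a simple example model. The class name GroupWriterExample and the output file example-groups.parquet are hypothetical.

```java
import java.io.IOException;
import org.apache.hadoop.fs.Path;
import org.apache.parquet.example.data.Group;
import org.apache.parquet.example.data.simple.SimpleGroupFactory;
import org.apache.parquet.hadoop.ParquetFileWriter;
import org.apache.parquet.hadoop.ParquetWriter;
import org.apache.parquet.hadoop.example.ExampleParquetWriter;
import org.apache.parquet.hadoop.metadata.CompressionCodecName;
import org.apache.parquet.schema.MessageType;
import org.apache.parquet.schema.MessageTypeParser;

// Hypothetical class: writes the example data without Avro.
final class GroupWriterExample {
    // The schema written in Parquet's own textual syntax instead of Avro.
    static final MessageType SCHEMA = MessageTypeParser.parseMessageType(
        "message UserRank { required int32 userId; required int32 rank; }");

    // Writes one row per {userId, rank} pair using the Group object model.
    static void write(Path path, int[][] rows) throws IOException {
        SimpleGroupFactory factory = new SimpleGroupFactory(SCHEMA);
        try (ParquetWriter<Group> writer = ExampleParquetWriter.builder(path)
                .withType(SCHEMA)
                .withCompressionCodec(CompressionCodecName.SNAPPY)
                // Replace an existing file instead of failing on a second run.
                .withWriteMode(ParquetFileWriter.Mode.OVERWRITE)
                .build()) {
            for (int[] row : rows) {
                writer.write(factory.newGroup()
                    .append("userId", row[0])
                    .append("rank", row[1]));
            }
        }
    }

    public static void main(String[] args) throws IOException {
        write(new Path("./example-groups.parquet"), new int[][] {{1, 3}, {2, 0}, {3, 100}});
    }
}
```

It works, but every field is set by name with no compile-time checking, which is part of why the Avro route below is more pleasant.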

You need two additional classes, which tie together Avro and Parquet:

- org.apache.parquet.avro.AvroSchemaConverter

- org.apache.parquet.avro.AvroParquetWriter
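As a quick illustration of the first of these classes, the sketch below parses the UserRank Avro schema defined in this post from a string and converts it with AvroSchemaConverter. Inlining the schema as a string and the class name SchemaConversionExample are my own choices to keep the example self-contained. Printing the result shows the Parquet schema, where each Avro int becomes a required int32 column.

```java
import org.apache.avro.Schema;
import org.apache.parquet.avro.AvroSchemaConverter;
import org.apache.parquet.schema.MessageType;

// Hypothetical class name; assumes avro and parquet-avro are on the classpath.
final class SchemaConversionExample {
    // Same shape as the UserRank Avro schema in this post.
    static final String AVRO_SCHEMA_JSON =
        "{\"type\": \"record\", \"namespace\": \"com.hydrogen18.examples\", \"name\": \"UserRank\","
        + " \"fields\": [{\"name\": \"userId\", \"type\": \"int\"},"
        + " {\"name\": \"rank\", \"type\": \"int\"}]}";

    // Parses the Avro schema and converts it into the equivalent Parquet MessageType.
    static MessageType toParquetSchema(String avroJson) {
        Schema avroSchema = new Schema.Parser().parse(avroJson);
        return new AvroSchemaConverter().convert(avroSchema);
    }

    public static void main(String[] args) {
        System.out.println(toParquetSchema(AVRO_SCHEMA_JSON));
    }
}
```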

So, first we must define a simple Avro schema to capture the objects from the example:

```json
{
  "type": "record",
  "namespace": "com.hydrogen18.examples",
  "name": "UserRank",
  "fields": [
    { "name": "userId", "type": "int" },
    { "name": "rank", "type": "int" }
  ]
}
```
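Generating a class is convenient, but it is not the only way to use this schema. As an alternative that skips code generation, Avro can parse the schema at runtime and fill in GenericRecord instances with GenericRecordBuilder. In this sketch, the class name GenericUserRanks and the int[][] input format are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import org.apache.avro.Schema;
import org.apache.avro.generic.GenericRecord;
import org.apache.avro.generic.GenericRecordBuilder;

// Hypothetical class: the UserRank schema used without a generated class.
final class GenericUserRanks {
    // The same schema as above, parsed at runtime.
    static final Schema SCHEMA = new Schema.Parser().parse(
        "{\"type\": \"record\", \"namespace\": \"com.hydrogen18.examples\", \"name\": \"UserRank\","
        + " \"fields\": [{\"name\": \"userId\", \"type\": \"int\"},"
        + " {\"name\": \"rank\", \"type\": \"int\"}]}");

    // Builds one record per {userId, rank} pair; build() throws if a field
    // that has no default value was never set.
    static List<GenericRecord> fromRows(int[][] rows) {
        List<GenericRecord> records = new ArrayList<>();
        for (int[] row : rows) {
            records.add(new GenericRecordBuilder(SCHEMA)
                .set("userId", row[0])
                .set("rank", row[1])
                .build());
        }
        return records;
    }

    public static void main(String[] args) {
        for (GenericRecord record : fromRows(new int[][] {{1, 3}, {2, 0}, {3, 100}})) {
            System.out.println(record);
        }
    }
}
```

The trade-off is that field names are plain strings, so a typo is only caught at runtime, whereas the generated class catches it at compile time.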

To learn more about the types of fields in Apache Avro, you can consult this document. This schema can then be used to generate a Java class. Generating a Java class from an Avro schema is done with Avro Tools, as explained in this document. I did this by integrating the generation step into Maven via the pom.xml file. The following class shows how to instantiate the generated class and write the instances out in Parquet format.

```java
package com.hydrogen18.examples;

// Generic Avro dependencies
import org.apache.avro.Schema;

// Hadoop stuff
import org.apache.hadoop.fs.Path;

// Generic Parquet dependencies
import org.apache.parquet.schema.MessageType;
import org.apache.parquet.hadoop.metadata.CompressionCodecName;

// Avro->Parquet dependencies
import org.apache.parquet.avro.AvroSchemaConverter;
import org.apache.parquet.avro.AvroParquetWriter;

public final class Main {
    public static void main(String[] args) {
        // UserRank is generated from the Avro schema, so it carries its own schema.
        Schema avroSchema = UserRank.getClassSchema();
        // The equivalent Parquet schema. AvroParquetWriter performs this conversion
        // internally, so it is only printed here to show what ends up in the file.
        MessageType parquetSchema = new AvroSchemaConverter().convert(avroSchema);
        System.out.println(parquetSchema);

        UserRank[] dataToWrite = new UserRank[] {
            new UserRank(1, 3),
            new UserRank(2, 0),
            new UserRank(3, 100)
        };

        Path filePath = new Path("./example.parquet");
        // Row group ("block") size and page size, both in bytes.
        int blockSize = 1024;
        int pageSize = 65535;

        // try-with-resources closes the writer, which writes the Parquet footer.
        try (AvroParquetWriter<UserRank> parquetWriter = new AvroParquetWriter<UserRank>(
                filePath,
                avroSchema,
                CompressionCodecName.SNAPPY,
                blockSize,
                pageSize)) {
            for (UserRank obj : dataToWrite) {
                parquetWriter.write(obj);
            }
        } catch (java.io.IOException e) {
            System.err.println(String.format("Error writing parquet file %s", e.getMessage()));
            e.printStackTrace();
        }
    }
}
```

This works because the class generated from the Avro schema has a static getClassSchema() method that returns its schema. Calling new AvroSchemaConverter().convert() on that schema produces a MessageType, which is the schema type defined by the Apache Parquet library. Interestingly enough, AvroParquetWriter needs both representations: it is constructed with the Avro schema and performs this same conversion internally, so the explicit conversion in the example is not strictly required. At this point, you just call .write() on the AvroParquetWriter instance for each object, and closing the writer finishes the file.
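The AvroParquetWriter constructor used in the example is deprecated in current parquet-mr releases in favor of a builder. Below is a sketch of the same write using AvroParquetWriter.builder(), with GenericRecord so that it does not depend on the generated class; the class name BuilderWriterExample is hypothetical. With the generated class, AvroParquetWriter.<UserRank>builder(...).withSchema(UserRank.getClassSchema()) should work the same way. The sketch also sets the write mode to OVERWRITE because, as far as I can tell, the default mode refuses to replace an existing file, so running the original example twice fails while example.parquet is still present.

```java
import java.io.IOException;
import org.apache.avro.Schema;
import org.apache.avro.generic.GenericRecord;
import org.apache.avro.generic.GenericRecordBuilder;
import org.apache.hadoop.fs.Path;
import org.apache.parquet.avro.AvroParquetWriter;
import org.apache.parquet.hadoop.ParquetFileWriter;
import org.apache.parquet.hadoop.ParquetWriter;
import org.apache.parquet.hadoop.metadata.CompressionCodecName;

// Hypothetical class: builder-based variant of the example's write loop.
final class BuilderWriterExample {
    static final Schema SCHEMA = new Schema.Parser().parse(
        "{\"type\": \"record\", \"namespace\": \"com.hydrogen18.examples\", \"name\": \"UserRank\","
        + " \"fields\": [{\"name\": \"userId\", \"type\": \"int\"},"
        + " {\"name\": \"rank\", \"type\": \"int\"}]}");

    static void write(Path path, int[][] rows) throws IOException {
        // Only the Avro schema is passed in; the writer derives the Parquet MessageType itself.
        try (ParquetWriter<GenericRecord> writer = AvroParquetWriter.<GenericRecord>builder(path)
                .withSchema(SCHEMA)
                .withCompressionCodec(CompressionCodecName.SNAPPY)
                // Replace an existing file instead of failing on a second run.
                .withWriteMode(ParquetFileWriter.Mode.OVERWRITE)
                .build()) {
            for (int[] row : rows) {
                writer.write(new GenericRecordBuilder(SCHEMA)
                    .set("userId", row[0])
                    .set("rank", row[1])
                    .build());
            }
        } // closing the writer writes the footer; the file is incomplete before that
    }

    public static void main(String[] args) throws IOException {
        write(new Path("./example.parquet"), new int[][] {{1, 3}, {2, 0}, {3, 100}});
    }
}
```

The builder keeps the defaults for row group and page size unless you override them, which is usually a better starting point than a hand-picked 1024-byte block size.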

You can find a complete working example on GitHub here or download it below. Once you have the example project, you'll need Maven and Java installed. The following commands compile and run the example:

- mvn install - build the example

- java -jar target/writing-parquet-example-0.1-jar-with-dependencies.jar - run the example

The output is written into a file called example.parquet. Hopefully this example is useful to others who need to write out Parquet files without depending on frameworks.
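To check the result without leaving Java, the file can be read back with AvroParquetReader from the same parquet-avro library. This is a minimal sketch, and the class name ReadBackExample is my own. If the generated UserRank class is on the classpath, parquet-avro may hand back UserRank instances instead of generic records; those implement GenericRecord as well, so the loop works either way.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import org.apache.avro.generic.GenericRecord;
import org.apache.hadoop.fs.Path;
import org.apache.parquet.avro.AvroParquetReader;
import org.apache.parquet.hadoop.ParquetReader;

// Hypothetical class: reads every record from a Parquet file written via parquet-avro.
final class ReadBackExample {
    // read() returns null once the last record has been consumed.
    static List<GenericRecord> readAll(Path path) throws IOException {
        List<GenericRecord> records = new ArrayList<>();
        try (ParquetReader<GenericRecord> reader = AvroParquetReader.<GenericRecord>builder(path).build()) {
            GenericRecord record;
            while ((record = reader.read()) != null) {
                records.add(record);
            }
        }
        return records;
    }

    public static void main(String[] args) throws IOException {
        for (GenericRecord record : readAll(new Path("./example.parquet"))) {
            System.out.println(record);
        }
    }
}
```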