Creating a real-time ray traced physics sandbox with Microsoft Copilot

As you might have noticed, I've had quite a bit of fun with image generation models for some time now. For me the use is largely limited to inconsequential things like creating thumbnails. I'm not much of an early adopter of any technology, but if Microsoft is going to subsidize the cost of it I certainly will do some experimentation to learn more about it. In line with that I decided to see if I could get Microsoft Copilot to generate me a 3D video game. The larger question I wanted to answer for myself is whether LLMs are useful in any way for writing code. One thing to keep in mind is that my results come from the perspective of someone who now has over a decade of experience as a software engineer. I'm able to identify and resolve issues in generated code that someone with less or no experience might struggle with. I'll cover that in more detail later.

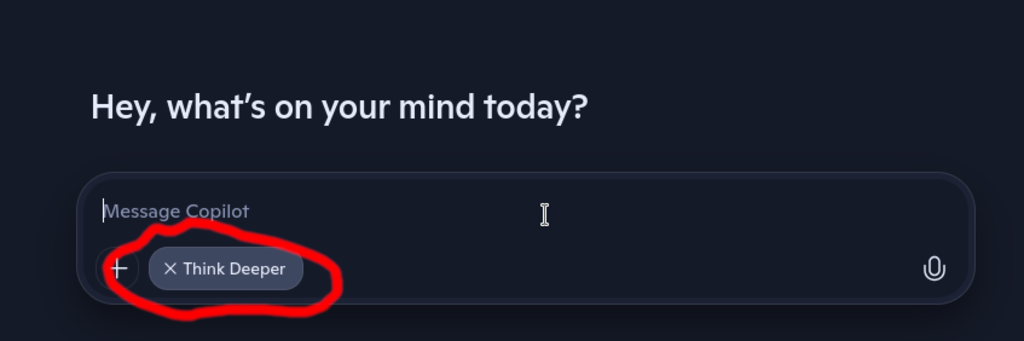

Microsoft Copilot is for now free to use. Copilot attempts to answer your questions and direct you to correct answers. It also generates code when requested. In fact, Copilot is surprisingly good at generating more than brief "Hello World" type applications. It can generate entire complex applications. To get it to do this be sure to select the "Think Deeper" option on the prompt before you submit.

Another thing I must call attention to here is that running code generated by Microsoft Copilot or any similar tool is inherently risky. Even if the code is not malicious and is not run as root, it could still do something extremely bad. Please proceed with caution if you choose to experiment like this.

Let's generate a video game

I don't know exactly what sparked this experiment but at some point I thought it would be interesting to see if Microsoft Copilot could generate a video game. I started with a prompt like "Make me a 2D video game involving trains using the C++ programming language". I went through a bunch of refinement to the prompt to get what I wanted. The first video game I got it to generate was actually a very simple 2D video game.

This may not look like much, but this game actually uses SDL, Box2D, and ENTT to build a pretty interesting game. It incorporates projectiles, health, collisions, and even win conditions! The reason why it looks weird is that I just downloaded random textures from OpenGameArt and applied them. This turned out to be a great starting point because I got to refine some details of the prompt that broadly apply to almost any code generation prompt.

Lesson one is that Copilot has some limit on the amount of response it can generate. I'm unsure how this is calculated, but it certainly just stops generating output at some point. Oddly enough, from what I can see you can simply go through multiple prompts in the same session and have it drip feed you huge amounts of code. It just does not want to generate large amounts of output in one response. The cynic in me says this is an attempt to drive up some sort of user engagement metric. This leads to a few different instructions in the prompt:

- "use libraries where possible" - Copilot has access to basically all of the source code on the internet and probably then some. Copilot has no problem spitting out huge amounts of code where a function call to a library would be 1 line. The main problem is that this generates more output. The second problem is you really don't know if this implementation works. It could be a verbatim copy from a well tested library or some mashup of different blog posts. I really don't look forward to debugging someone else's code that tries to compute the determinant of a matrix

- "output a single c++ file" - I am always prompting for output in C++ and this causes Copilot to generate everything as one file. This saves on output size and is actually simpler to work with in my opinion. Whatever organization Copilot might choose isn't very good and it's a pain to deal with anyways. You can copy and paste a single C++ file quite quickly to test it out. In the event that it proves useful, anyone with programming experience shouldn't have trouble breaking it up into separate files. In the event that you don't have any experience writing software, this is also going to be the preferred method. You probably won't be maintaining the code, so you can just throw it at a compiler and find out if it works.

- "Generate a complete and working program. Do not generate skeletons, pseudocode or scaffolding" - For some reason Copilot loves to generate lots of incomplete code and suggest that you could fill it in. While that may be true, it really defeats the point of using an LLM to have it suggest I can do the work myself.

Lesson two is that Copilot loves to generate suggested steps to compile code. In some cases it generates an entire build system. I always include something like "don't generate a build system, I can handle compiling it on my own". The compilation steps aren't even likely to be correct in some cases. Sometimes Copilot generates an entire CMake build system to compile 1 file. Other times it generates autotools based build systems. I do not need any of this.

Lesson three is that Copilot takes instructions literally. Since I want graphics in my video game I gave Copilot instructions like "use assets for the artwork of the game but do not generate them. Include URLs I can use to download them". Copilot is great at the first part of this, it skips generating an asset and just includes code to load it from a file. It then generates a URL to download it from. Literally, it just generates a URL to OpenGameArt that has never existed at any point in time. This turns out to be just annoying, I can just go download anything I want and drop it in. I actually kept this part of my prompt even though Copilot never even once generated a URL that is valid.

Lesson four is that when using multiple libraries, you need to be explicit about how they are used together. When I generated this 2D video game I asked for a video game using Box2D for physics and ENTT as an Entity-Component-System. You need to prompt for that but also include "be sure and properly integrate usage of Box2D with ENTT". Otherwise you may find that Box2D is used for physics but that ENTT is only used in some token manner and not the full extent that it can be.

This is the actual prompt I used to generate the train defender game

train_defender_prompt_final.txt 1.5 kB

Make me a single player 2D video game. The controls should be keyboard and mouse. For any input, output, sound, and graphics use libSDL. Do not use 3d graphics. The video game camera perspective should be a top down perspective. The players train stays centered in the viewport and to give the appearance of motion the world moves around the train. To save on output size do not generate a build system. Do not generate any asset files for sound, images, etc. but instead provide a hyperlink where I can download them. Make sure the hyperlinks actually are valid to download from. For displaying any text to the players use the SDL ttf library. Generate output in the C++ programming language only. All game mechanics should be implemented using the ENTT entity component system. The gameplay of the video game involves driving a train on tracks between predefined locations on a map. In between each location are numerous monsters that try and attack the train. The player must be able to add a variety of combat equipment to the train before each mission. When the mission begins the player then pilots the train along the tracks and uses the weaponry to stop the monsters from damaging the train. The train carries cargo. A successful mission is one where the train arrives on time and the cargo is delivered in good condition. A failed mission is one where the train does not arrive on time or the cargo is damaged while in transit.

Let's generate a 3D video game, or maybe not

Since generating a working 2D video game turned out to be easy, I decided to move on to a 3D video game. My basic idea was that I should be able to generate a 3D video game that is a first person shooter. The player should have multiple guns and should have to navigate a level killing zombies, gathering power ups, etc. in order to win the game.

The high points of what I wanted are

- 3D video game written in C++

- Keyboard and mouse for controls

- SDL for input, output, video, and sound

- OpenGL for 3D graphics

- ENTT as the Entity-Component-System

- Bullet Physics for physics simulation

- Levels that are a maze

- 3 different types of guns

- Enemies are all zombies

Copilot certainly did its best to honor all my requests. I got it to generate lots of code that actually looks pretty good, but never got to a working video game. The produced code actually does use OpenGL and definitely does rendering. There were also a few times when I died, so I think it has some sort of damage simulation going on.

I could possibly sit there and fix all the bugs around this, but making a simple video game like this in OpenGL isn't terribly interesting to me. It's largely been done and there are plenty of examples out there. Tram SDK comes to mind as an entire video game SDK if you're into low quality graphics. This still turned out to be a pretty good learning point as I learned a bunch more about prompting Copilot.

Lesson five is that Copilot has no qualms about using obsolete APIs. As soon as I prompted about using OpenGL, Copilot came back with usage of OpenGL's immediate mode. This is completely obsolete and the performance is absolutely awful. So pretty much every prompt needs to include "do not use any obsolete or deprecated APIs". This seems to be the downside to asking Copilot to prefer to use libraries. At some point it actually tried to generate code that was targeting some old version of OpenGL like version 2.x.

Lesson six is that Copilot really wants to avoid generating all the code. When I asked it to generate OpenGL code for 3D graphics it promptly told me that I'd need to write some shaders. As long as you tell Copilot "include the source for any OpenGL shaders needed" it of course finds the simplest vertex shader and geometry shader and even uses them correctly in the program.

Lesson seven is that Copilot can really go off on a tangent. OpenGL isn't really a cutting edge 3D graphics framework, but it can still be used for modern graphics development if you want. As a result Copilot at least once tried to include all sorts of advanced 3D graphics pipeline stuff like dynamic lighting, shadows, smoke effects, etc. So I just went ahead and put in my prompt "should be a simple implementation that does not implement things like dynamic lighting, shadows, or ray tracing"

One unusual thing I learned about is the "glad" software tool in the midst of this. I kept noticing Copilot would generate this statement most of the time #include <glad/glad.h>. I thought this was some sort of library I needed to install in order to compile the program. It turns out that GLAD is a multi language loader generator for OpenGL. This is meant to simplify loading OpenGL and all the various extensions you may need to load to get a modern 3D graphics programming stack running on a real system. My professional experience isn't in 3D graphics programming so I had never run into this. This is actually pretty neat because I learned something I had not been exposed to beforehand.

At this point I sort of put this project down for a while as it was just something I had been experimenting with to learn more about code generation with LLMs. The real obstacle to me was getting Copilot to generate OpenGL code that did something other than render total garbage. This is the final prompt I wound up trying before I stopped this part of the investigation

final_zombie_game_attempt_prompt.txt 3.9 kB

Make a me 3D video game. The video game is a first person shooter. The controls should use keyboard and mouse. The game should be written in the C++ programming language. Use libSDL for input, output, and sound effects. For 3D Graphics you must use WebGPU, specifically use the Dawn library developed for Chrome. Make sure the output code includes a complete and full usage of the Dawn library to draw 3d graphics. There should be no unimplemented functions. To open the window for showing 3D graphics use the GLFW library. Make sure the output does include the source for any shaders needed to run the video game. The 3D graphics implementation should be a simple implementation that does not implement things like dynamic lighting, shadows, or ray tracing. Use the ENTT entity component system for the mechanics of the video game. The player should traverse levels shooting at zombies to kill them. The levels should include a diverse set of elements like stairs, hallways, tunnels, fields, open areas and rooms with doors. The levels should be complex and have a maze like design. The zombies should move slowly but have powerful attacks that can damage the players. There should be 3 types of zombies each with their own unique mechanic that makes them a challenge to kill. The zombies come in waves that get larger as time goes on. When each wave is killed the player should get rewards. The rewards should include ammunition, health packs, and power ups. The video game should have three different types of guns. There should be a pistol, a shotgun, and a machine gun. Each gun should have a unique kind of ammo and unique game mechanics. Use the Bullet Physics engine for all physics simulation including collision detection of projectiles with zombies. Be sure and properly integrate ENTT with Bullet Physics. This video game should be complete, functional and ready to play after being compiled. 
To avoid generating large amounts of output, prefer to use well tested libraries for functionality when possible. There should not be any skeletons, pseudo code, or incomplete code. Be sure and create a video game that is ready to play. When it is necessary to use assets for sound, geometry, textures and other resources avoid generating large amounts of output. Instead provide hyperlinks to locations where these assets can be downloaded by me. Make sure the hyperlinks actually work. In order to avoid generating large amounts of output do not generate a build system. I will determine the best method to compile this software on my own. You may organize the output into any number of files as needed. For 3d geometry assets make sure to load them from GLTF format files using a library to do so. Make sure the player can move using the keyboard controls. Make sure the player can look and aim the gun using the mouse control. Make sure the player can use the mouse button to shoot the gun. When the player's ammunition for the current gun reaches zero the gun should not be able to fired until more ammunition is obtained. Make sure that when a projectile fired by the player collides with a zombie it damages the zombie. Make sure the player can use keys 1,2, and 3 to select different guns. Make sure the player has health and a health meter is shown somewhere on the screen. When the player's health meter reaches zero the player should die. Make sure the zombies have hit points so they be can damaged when the player shoots them. Make sure the zombies die when their hit points reach zero. Make sure the screen displays the current amount of ammo the player has selected for their current weapon. Make sure the player cannot run off the edge off the map. Make sure all in game entities have at least one asset associated with them so they render in the in-game camera and the player can see them. Make sure all in game events have at least one sound associated with them.

Let's generate a 3D video game, the other way

I don't know exactly what sent me down this path but I got the idea that if I took my 3D video game prompt and simplified it I might get something that at least runs and looks OK. The first thing I did was replace all requests about game mechanics, shooting zombies, etc. with a request for a physics sandbox. Then I got the idea that if I could render graphics in a simpler way that Copilot would have a better chance of generating useful code. I ended up prompting for a ray traced implementation because I figured ray tracing is so simple that pretty much anyone can implement it.

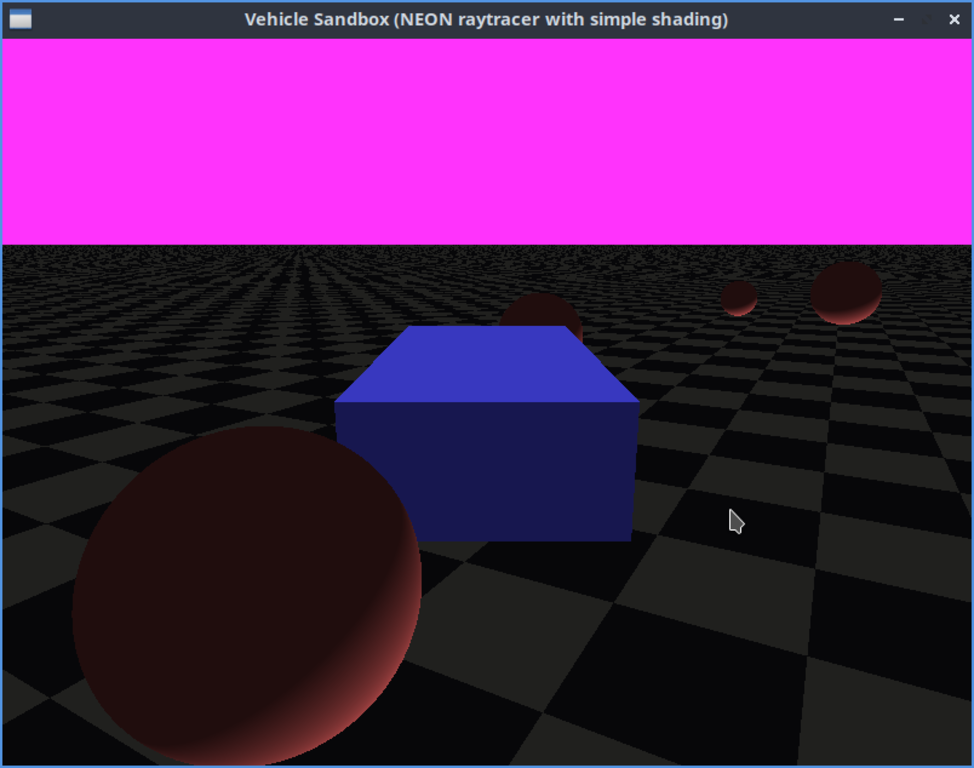

To my surprise my first prompt produced a working video game.

It's not much to look at. The vehicle is just a sphere and the other vehicles are also just spheres. But it's a clearly rendered scene and movement even works. The vehicle controls are completely wild, but it does actually implement a real physics simulation with collisions.

Up until this point, I had always started a new Copilot session every time to refine my prompt. Encouraged by success here I asked Copilot to change the vehicle to be a box of some kind.

This too worked. There's still some weirdness going on. The vehicle box size changes for some reason, the controls are weird, and I am unsure if the performance is really acceptable. But it does work. I could do a bunch of things here, but I decided to try and improve the performance of this ray tracing implementation. My desktop computer for most tasks has been a Raspberry Pi 5 for a while now. So I asked Copilot to improve the output by adding NEON SIMD instructions to improve the performance. This also worked, producing a noticeably better frame rate.

At this point Copilot suggested it could use more advanced ray tracing techniques and also could use instructions that are part of the aarch64 instruction set. I of course asked for all of this. What Copilot generated compiled but did not produce anything useful. In fact it barely rendered anything at all after this request. This sent me down an extremely long rabbit hole of Copilot generating non-functioning output. I asked it several times to fix things and even pointed out when it generated syntactically incorrect code. This never actually got me anywhere. After eight such attempts I realized that the generated code was actually declining in quality as I went. I was not any closer to a working product than I had been at the first misstep.

To understand just how bad the generated code got at this point, take a look at this function

```cpp
// ---------- Ray-AABB slab test (packetized using NEON) ----------
static inline uint32x4_t rayAABBIntersectMask_NEON(const float32x4_t &rox, const float32x4_t &roy, const float32x4_t &roz,
                                                   const float32x4_t &rdx, const float32x4_t &rdy, const float32x4_t &rdz,
                                                   const Vec3 &amin, const Vec3 &amax) {
    float32x4_t tmin = vdupq_n_f32(-LARGE_T), tmax = vdupq_n_f32(LARGE_T);
    // X slab
    float32x4_t aminx = vdupq_n_f32(amin.x), amaxx = vdupq_n_f32(amax.x);
    uint32x4_t rd_x_zero = vcleq_f32(vabsq_f32(rdx), vdupq_n_f32(1e-12f));
    // For non-zero, tx1 = (aminx - rox)/rdx , tx2 = (amaxx - rox)/rdx
    float32x4_t invrdx = vrecpeq_f32(rdx);
    // refine one NR step
    invrdx = vmulq_f32(vrecpsq_f32(rdx, invrdx), invrdx);
    float32x4_t tx1 = vmulq_f32(vsubq_f32(aminx, rox), invrdx);
    float32x4_t tx2 = vmulq_f32(vsubq_f32(amaxx, rox), invrdx);
    float32x4_t txmin = vminq_f32(tx1, tx2);
    float32x4_t txmax = vmaxq_f32(tx1, tx2);
    tmin = vmaxq_f32(tmin, txmin);
    tmax = vminq_f32(tmax, txmax);
    // Y slab
    float32x4_t aminy = vdupq_n_f32(amin.y), amaxy = vdupq_n_f32(amax.y);
    float32x4_t invrdy = vrecpeq_f32(rdy);
    invrdy = vmulq_f32(vrecpsq_f32(rdy, invrdy), invrdy);
    float32x4_t ty1 = vmulq_f32(vsubq_f32(aminy, roy), invrdy);
    float32x4_t ty2 = vmulq_f32(vsubq_f32(ama xy?), invrdy); // fix spelling next line
    // NOTE: above macro accidental text; replace with correct code below
    (void)0;
    // We'll reimplement Y and Z with correct code outside this helper for clarity.
    return vdupq_n_u32(0xffffffff); // placeholder - not used (we do AABB tests differently in code below)
}
```

The name of this function suggests that it performs a ray intersection test against an axis-aligned bounding box using NEON acceleration. This function certainly uses lots of NEON intrinsics available in GCC, which is what function calls like vdupq_n_f32 are. The statement float32x4_t ty2 = vmulq_f32(vsubq_f32(ama xy?), invrdy) is not syntactically valid. The comment after the line saying "fix spelling next line" almost seems like Copilot was trained on the result of GitHub pull requests or something like that, where someone was trying to show what needed to be fixed. It also generates the completely useless (void)0 statement. At the end of the function it just returns a static value with return vdupq_n_u32(0xffffffff). So in other words the function does nothing useful. All of this might be excusable in some way but as it turns out rayAABBIntersectMask_NEON is not even invoked in the program. It is just trash in Copilot's output.
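For comparison, a working slab test is short. The following is a minimal scalar sketch written for this article, not something taken from Copilot's output; the names rayAABBIntersect and this bare-bones Vec3 are hypothetical. It intersects the ray with the pair of planes on each axis and checks that the three resulting intervals still overlap.

```cpp
#include <algorithm>
#include <limits>
#include <utility>

struct Vec3 { float x, y, z; };  // hypothetical minimal vector type

// Scalar ray/AABB slab test. Returns true when the ray origin + t * dir,
// with t >= 0, passes through the box [bmin, bmax].
bool rayAABBIntersect(const Vec3& origin, const Vec3& dir,
                      const Vec3& bmin, const Vec3& bmax) {
    float tmin = 0.0f;
    float tmax = std::numeric_limits<float>::infinity();

    const float o[3]  = {origin.x, origin.y, origin.z};
    const float d[3]  = {dir.x, dir.y, dir.z};
    const float lo[3] = {bmin.x, bmin.y, bmin.z};
    const float hi[3] = {bmax.x, bmax.y, bmax.z};

    for (int axis = 0; axis < 3; ++axis) {
        if (d[axis] == 0.0f) {
            // Ray is parallel to this slab: the origin must already be inside it.
            if (o[axis] < lo[axis] || o[axis] > hi[axis]) return false;
            continue;
        }
        const float inv = 1.0f / d[axis];
        float t1 = (lo[axis] - o[axis]) * inv;
        float t2 = (hi[axis] - o[axis]) * inv;
        if (t1 > t2) std::swap(t1, t2);
        tmin = std::max(tmin, t1);  // latest entry over all slabs so far
        tmax = std::min(tmax, t2);  // earliest exit over all slabs so far
        if (tmin > tmax) return false;  // the intervals no longer overlap
    }
    return true;
}
```

A packetized NEON version would do the same work for four rays at once and return a per-lane mask instead of a bool.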

I have heard this referred to as context poisoning in the past. In the case of Copilot it's apparently very real. So I went back and then changed my original prompt to ask for NEON acceleration of the ray tracing targeting the aarch64 platform but to avoid any advanced ray tracing techniques. I did wind up asking Copilot to add in shading to simulate some lighting to make the output easier to look at. This produced working output immediately.

Lesson eight is that there is very little reason to continue a conversation with Copilot after the initial generation of code is complete. Instead, if more work needs to be done just change the original prompt and start a new session.

One thing I had noticed is that Copilot has a tendency to treat a request like "use NEON to accelerate ray tracing" as a request to simply include some usage of NEON intrinsics. In at least some cases this computation had no impact on the output and could have been omitted anyways. Once I noticed this I started double checking critical sections of the output to make sure that there were no pointless computations included.

This is the actual prompt I used on the last attempt

final_ray_traced_physics_sandbox_prompt.txt 2.9 kB

create me a video game that is a simple vehicle sandbox in the C++ programming language. The player should be able to control the vehicle in the physics sandbox. The vehicle should drive around a simple world with basic terrain. The player view should be 3rd person. For input, output, & video use SDL2. The player should be able to control the vehicle using the W A S D keys to drive. audio is not required in the output program. Use the ENTT entity component system for the mechanics of the video game. Use the Bullet Physics engine for all physics simulation including collision detection. Be sure and properly integrate ENTT with Bullet Physics. This video game should be complete, functional and ready to play after being compiled. To avoid generating large amounts of output, prefer to use well tested libraries for functionality when possible. There should not be any skeletons, pseudo code, or incomplete code. Be sure and create a video game that is ready to play. When it is necessary to use assets for sound, geometry, textures and other resources avoid generating large amounts of output. Instead provide hyperlinks to locations where these assets can be downloaded by me. Make sure the hyperlinks actually work. In order to avoid generating large amounts of output do not generate a build system. I will determine the best method to compile this software on my own. output a single c++ file. do not use any obsolete or deprecated APIs. make sure to use libraries where possible to implement things instead of generating large amounts of code the graphics should be 3D. Use a ray tracing implementation in software to render all graphics. Do not use advanced techniques like lighting, global illumination, anti alasing. The program should use NEON instructions for the ARM architecture to accelerate the software raytracing. Be sure and use GCC intrinsics to access the NEON instructions. The architecture is aarch64 so use all NEON instructions for vectorization where possible. 
be sure and use vectorized intersections. use a vectorized (batch-of-4) NEON-accelerated intersection kernel instead of scalar per-pixel intersection loops. do not use advanced techniques like BVH The vehicle should be a rectangular box that is rendered from triangular geometry. do not use a pure axis aligned bounding box for rendering the vehicle as it does not produce the correct result The gameplay area should include spheres that interact with the vehicle as part of collisions. The gameplay area should be rendered with a basic checkerboard pattern. --- After that Copilot decided to generate a bunch of files so I replied with: generate a complete single file program again for me with that correction --- After that I added this request: add basic shading to spheres and the vehicle geometry to give the appearnce of a single light source. generate a single file for me again

Fixing bugs

Coloring

As you might notice from the above screenshot, the rendered output is rather red. I thought this was some weird color choice on the part of Copilot, but eventually found it declares a texture as having a pixel format of SDL_PIXELFORMAT_RGBA8888. SDL's texture specifications work from the highest byte to the lowest byte. The function for storing the color of a pixel is this

```cpp
static inline void store_pixel(uint32_t* pixels, int idx, float r, float g, float b) {
    uint8_t R = (uint8_t)fminf(255.0f, fmaxf(0.0f, r*255.0f));
    uint8_t G = (uint8_t)fminf(255.0f, fmaxf(0.0f, g*255.0f));
    uint8_t B = (uint8_t)fminf(255.0f, fmaxf(0.0f, b*255.0f));
    pixels[idx] = (0xFFu << 24) | (R << 16) | (G << 8) | B;
}
```

The pixel format is declared as Red-Green-Blue-Alpha, but the highest byte is always set to 0xFF and the variables R, G, B are packed below it. With that declaration the constant 0xFF is read as the red channel, which is why everything looks red. So the pixel format is declared wrong. I changed the pixel format declaration to SDL_PIXELFORMAT_ARGB8888 and the scene rendered with the expected colors.
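The mismatch can be reproduced without SDL at all. This is a small sketch under the assumption that the packed formats are read from the highest byte to the lowest, as described above; pack_argb, Channels, read_as_argb8888 and read_as_rgba8888 are hypothetical helper names, not SDL functions.

```cpp
#include <cstdint>

// Packs 8-bit channels the same way store_pixel does: alpha in bits 24-31,
// red in bits 16-23, green in bits 8-15, blue in bits 0-7.
constexpr std::uint32_t pack_argb(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return (0xFFu << 24) | (std::uint32_t(r) << 16) | (std::uint32_t(g) << 8) | std::uint32_t(b);
}

struct Channels { std::uint8_t r, g, b, a; };

// Interprets a packed pixel as ARGB8888 (alpha is the highest byte).
constexpr Channels read_as_argb8888(std::uint32_t p) {
    return { std::uint8_t(p >> 16), std::uint8_t(p >> 8), std::uint8_t(p), std::uint8_t(p >> 24) };
}

// Interprets the same 32 bits as RGBA8888 (red is the highest byte).
constexpr Channels read_as_rgba8888(std::uint32_t p) {
    return { std::uint8_t(p >> 24), std::uint8_t(p >> 16), std::uint8_t(p >> 8), std::uint8_t(p) };
}
```

A pure blue pixel packed with pack_argb(0, 0, 255) is 0xFF0000FF. Read as ARGB8888 it is still blue, but read as RGBA8888 the red channel is 255 and the blue channel is 0, which matches the red tint in the first screenshot.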

Driving

After driving the car around a bit it seemed like somehow the left-right controls were inverted or possibly the camera was in some weird position. I inspected the code for computing the camera position and found this

```cpp
// Camera follow vehicle
{
    auto &bb = reg.get<BulletBody>(vehicle_entity);
    btTransform tr;
    bb.body->getMotionState()->getWorldTransform(tr);
    btVector3 pos = tr.getOrigin();
    btVector3 back = tr.getBasis() * btVector3(0,0,-1);
    btVector3 camTarget = pos;
    btVector3 camPosVec = pos + btVector3(0,1.5,0) + back * 6.0;
    cam.pos = Vec3(camPosVec.x(), camPosVec.y(), camPosVec.z());
    cam.target = Vec3(camTarget.x(), camTarget.y(), camTarget.z());
    cam.up = Vec3(0,1,0);
}
```

This computes the camera position and its target. The camera "looks" at the target, which is just the vehicle here. The camera position is computed as the vehicle position but 1.5 units higher in the Y dimension. Like many 3D applications, this code treats the Y axis as the axis we think of as "up". The other value used to compute camPosVec is back, which displaces the camera 6 units along the vehicle's negative Z direction. This winds up being "behind" the car. So the camera position is actually reasonable. I eventually found this was the code for the W & S keys.

```cpp
if (keyW) force += forward * (-driveForce);
if (keyS) force += forward * (driveForce*0.6f);
```

When W is pressed the vehicle has a force applied in the negative direction relative to the "forward" vector. This basically means that W is the brake and the S key is the vehicle accelerator. So it's effectively inverted compared to what I would expect. I just changed it to this to get the expected controls.

```cpp
if (keyW) force += forward * (driveForce);
if (keyS) force += forward * (-driveForce*0.6f);
```

Delay loop

I also discovered Copilot had added a call to SDL_Delay(8);. This adds 8 milliseconds of delay to every frame. I think it is there to try and limit the frame rate to around 60 FPS. I removed this entirely as it is not needed.

The final product

After these improvements I am now able to drive around in the "vehicle" and hit the spheres in the infinite plane that is the game space.

Adding a frame rate counter

Driving around in the simulation I was actually fairly impressed with the animation. The frame rate is high enough to be believable. I was curious to see what the frame rate actually was. So I added a uint32_t that is incremented each time a frame is ray traced. I then used std::chrono::high_resolution_clock::now() to compute the elapsed time while the game is running. After the game is complete I can compute the average frame rate by dividing the number of frames rendered by the time elapsed in seconds. This gives frames per second. The actual frame rate is always 22-23 FPS as it turns out.
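The counter described above can be sketched roughly like this. It is an illustration rather than the code in the game: the FrameCounter class and its member names are hypothetical, and it uses std::chrono::steady_clock instead of high_resolution_clock because a steady clock is guaranteed not to jump backwards.

```cpp
#include <chrono>
#include <cstdint>

// Counts rendered frames and reports the average rate since construction.
class FrameCounter {
public:
    using Clock = std::chrono::steady_clock;

    // Call once per ray traced frame.
    void frameRendered() { ++frames_; }

    std::uint32_t frames() const { return frames_; }

    Clock::time_point start() const { return start_; }

    // Average frames per second between construction and `now`.
    double averageFps(Clock::time_point now = Clock::now()) const {
        const std::chrono::duration<double> elapsed = now - start_;
        return elapsed.count() > 0.0 ? frames_ / elapsed.count() : 0.0;
    }

private:
    Clock::time_point start_ = Clock::now();
    std::uint32_t frames_ = 0;
};
```

In the game loop this would mean constructing the counter before the loop, calling frameRendered() after each frame is presented, and printing averageFps() on exit.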

Understanding the NEON accelerated ray tracing loop

The ray tracing loop consists of 4 major parts

- Computing a ray associated with each pixel in the viewport, based off the field of view

- Checking for intersection between each ray and the spheres

- Checking for intersection between each ray and the triangular geometry defining the vehicle

- Checking for intersection between each ray and the ground plane

My interest lies primarily in how step 3 is implemented. If the raytracer can render triangular geometry then it can render most 3D models. The actual implementation is based on the Möller–Trumbore intersection algorithm. This is a textbook algorithm used for ray tracing. I actually just asked Copilot to generate a pure C++ implementation of this as a command line utility. I cleaned it up a bit and made it as simple as possible while demonstrating the algorithm.

moller_trumbore_copilot.cpp 3.3 kB lines 28-60 shown

```cpp
// Returns true if intersection occurs; results are in t, u, v
// Ray: origin + t * dir
bool mollerTrumbore(const Vec3& orig, const Vec3& dir,
                    const Vec3& v0, const Vec3& v1, const Vec3& v2,
                    float &t, float &u, float &v) {
    constexpr float eps = std::numeric_limits<float>::epsilon();
    const Vec3 edge1 = v1 - v0;
    const Vec3 edge2 = v2 - v0;
    const Vec3 pvec = cross(dir, edge2);
    const float det = dot(edge1, pvec);
    if (std::fabs(det) < eps) {
        return false; // ray parallel or triangle degenerate
    }
    const float invDet = 1.0 / det;
    const Vec3 tvec = orig - v0;
    u = dot(tvec, pvec) * invDet;
    if (u < 0.0 || u > 1.0) {
        return false;
    }
    const Vec3 qvec = cross(tvec, edge1);
    v = dot(dir, qvec) * invDet;
    if (v < 0.0 || u + v > 1.0) {
        return false;
    }
    t = dot(edge2, qvec) * invDet;
    if (t < eps) {
        return false; // intersection behind origin or too close
    }
    return true;
}
```

Without going into a lesson in 3D vector mathematics, I can summarize this implementation as doing the following

- The inputs are a ray origin, a ray direction, and a triangle. The triangle is defined as 3 points in space called A, B, & C (v0, v1, and v2 in the code)

- The triangle is converted into a format defined by the first point in space and two edges. The edges are the difference between B & A and C & A. These are edge1 and edge2

- Compute pvec

- Compute det

- If the absolute value of det is very small, the ray is parallel to the triangle and there is no intersection

- Compute the inverse determinant, called invDet

- Compute tvec, which is the ray origin minus the vertex A of the triangle

- Compute u

- If u is less than 0.0 then the ray does not intersect

- If u is greater than 1.0 then the ray does not intersect

- Compute v

- If v is less than 0.0 then the ray does not intersect

- If the sum of u and v is greater than 1.0 then the ray does not intersect

- Compute t

- If t is less than a small positive value then the intersection is behind the ray origin or too close to it, so the ray does not intersect

- At this point t, u, v define the intersection of the ray with the triangle
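The steps above can be traced with a concrete ray. The sketch below repeats the routine so it compiles on its own; the Vec3 type, its helpers, the Hit struct, and intersectUnitTriangle are hypothetical stand-ins, since the part of the file that defines the real ones is not shown here.

```cpp
#include <cmath>
#include <limits>

struct Vec3 { float x, y, z; };  // hypothetical minimal vector type

Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Same steps as the listing above: edges, pvec, det, u, v, t.
bool mollerTrumbore(const Vec3& orig, const Vec3& dir,
                    const Vec3& v0, const Vec3& v1, const Vec3& v2,
                    float& t, float& u, float& v) {
    constexpr float eps = std::numeric_limits<float>::epsilon();
    const Vec3 edge1 = v1 - v0;
    const Vec3 edge2 = v2 - v0;
    const Vec3 pvec = cross(dir, edge2);
    const float det = dot(edge1, pvec);
    if (std::fabs(det) < eps) return false;  // parallel or degenerate
    const float invDet = 1.0f / det;
    const Vec3 tvec = orig - v0;
    u = dot(tvec, pvec) * invDet;
    if (u < 0.0f || u > 1.0f) return false;
    const Vec3 qvec = cross(tvec, edge1);
    v = dot(dir, qvec) * invDet;
    if (v < 0.0f || u + v > 1.0f) return false;
    t = dot(edge2, qvec) * invDet;
    return t >= eps;  // reject hits behind or at the origin
}

struct Hit { bool hit; float t, u, v; };

// Shoots a ray at the triangle A=(0,0,0), B=(1,0,0), C=(0,1,0).
Hit intersectUnitTriangle(const Vec3& orig, const Vec3& dir) {
    Hit h{false, 0.0f, 0.0f, 0.0f};
    h.hit = mollerTrumbore(orig, dir, {0, 0, 0}, {1, 0, 0}, {0, 1, 0}, h.t, h.u, h.v);
    return h;
}
```

A ray starting at (0.25, 0.25, 1) and pointing straight down the negative Z axis hits this triangle with t = 1, u = 0.25, and v = 0.25. The hit point origin + t * dir and the barycentric form A + u * edge1 + v * edge2 both give (0.25, 0.25, 0).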

That algorithm actually has a bunch of steps. Before jumping into the NEON implementation, you need to understand that the NEON intrinsics used expect four floating point numbers as operands. A function call like vsubq_f32 expects two such operands. So it takes a total of 8 floating point numbers. This is actually not a function, but a wrapper around the underlying hardware instructions made possible by GCC intrinsics. Usage of it looks like this

    float32x4_t c = vsubq_f32(a, b);

The type `float32x4_t` is used to represent four floating point values in a single variable. The computed value `c` is also four floating point values. So what this actually computes is

| index | value in c |
|---|---|
| 0 | a[0] - b[0] |
| 1 | a[1] - b[1] |
| 2 | a[2] - b[2] |
| 3 | a[3] - b[3] |

Each component of the output value `c` is computed by subtracting the component of `b` from the same component of `a`. Nearly all of the NEON intrinsics in use work like this and operate on groups of four floating point numbers or four 32-bit unsigned integers. The intrinsics used in the ray tracing implementation are

| NEON intrinsic | data type | description |
|---|---|---|
| `vbslq_f32` | floating point | bitwise select |
| `vcltq_f32` | floating point | compare less than |
| `vcgtq_f32` | floating point | compare greater than |
| `vcgeq_f32` | floating point | compare greater than or equal |
| `vcleq_f32` | floating point | compare less than or equal |
| `vmulq_f32` | floating point | multiply |
| `vsubq_f32` | floating point | subtract |
| `vabsq_f32` | floating point | absolute value |
| `vaddq_f32` | floating point | add |
| `vrecpeq_f32` | floating point | reciprocal estimate |
| `vrecpsq_f32` | floating point | reciprocal refinement step |
| `vdupq_n_f32` | floating point | duplicate a scalar into every element of a vector |
| `vorrq_u32` | unsigned integer | bitwise inclusive OR |
| `vandq_u32` | unsigned integer | bitwise AND |
| `vmvnq_u32` | unsigned integer | bitwise NOT |
| `vgetq_lane_u32` | unsigned integer | move one vector element to a general-purpose register |
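To make the lane-wise behavior concrete, here is a minimal sketch of a subtract and a compare-then-select. The names `Float4`, `sub4`, and `select_less` are hypothetical helpers invented for this example and are not part of the generated program. When compiled for a NEON target the real intrinsics are used. Otherwise a plain loop stands in for them, so the example can be tried on any machine.

```cpp
#include <array>
#if defined(__ARM_NEON)
#include <arm_neon.h>
#endif

// Hypothetical helper type: four floats, the same shape as float32x4_t.
using Float4 = std::array<float, 4>;

// Lane-wise subtraction: result[i] = a[i] - b[i].
Float4 sub4(const Float4& a, const Float4& b) {
    Float4 out{};
#if defined(__ARM_NEON)
    const float32x4_t r = vsubq_f32(vld1q_f32(a.data()), vld1q_f32(b.data()));
    vst1q_f32(out.data(), r);
#else
    // Portable stand-in for vsubq_f32 on machines without NEON.
    for (int i = 0; i < 4; ++i) out[i] = a[i] - b[i];
#endif
    return out;
}

// Lane-wise select: result[i] = (a[i] < b[i]) ? x[i] : y[i].
// On NEON the compare yields a mask with all bits set in every passing lane,
// and vbslq_f32 uses that mask to pick bits from x or y.
Float4 select_less(const Float4& a, const Float4& b,
                   const Float4& x, const Float4& y) {
    Float4 out{};
#if defined(__ARM_NEON)
    const uint32x4_t mask = vcltq_f32(vld1q_f32(a.data()), vld1q_f32(b.data()));
    const float32x4_t r = vbslq_f32(mask, vld1q_f32(x.data()), vld1q_f32(y.data()));
    vst1q_f32(out.data(), r);
#else
    // Portable stand-in for vcltq_f32 followed by vbslq_f32.
    for (int i = 0; i < 4; ++i) out[i] = (a[i] < b[i]) ? x[i] : y[i];
#endif
    return out;
}
```

Calling `sub4` with `{5, 6, 7, 8}` and `{1, 2, 3, 4}` gives `{4, 4, 4, 4}`. The compare-then-select pair is the pattern the ray tracer relies on to keep a result for some lanes while leaving the others untouched.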

The NEON accelerated ray tracer computes four pixels per iteration. Instead of taking a single ray origin and ray direction, it takes four of each at a time. There is still only one triangle to intersect with, but there are four outputs. One noticeable difference is that the implementation cannot return early: a ray that fails a test shares its vector with three others that may still pass, so the outcome of every test is recorded as a mask and the masks are combined at the end. Almost every operation computes four results at once. For example, `pvec` is the cross product of the ray direction and the second edge of the triangle. In the NEON accelerated version, four values of `pvec` are computed from four different ray directions and the same second edge.
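The sketch below illustrates those two ideas without any NEON code: storing four rays component by component so that one operation touches all four, and replacing early returns with masks. It is a portable approximation under my own assumptions. `Float4`, `Mask4`, `Dir4`, `cross4`, and `barycentric_valid` are hypothetical names, and the plain loops stand in for what a single NEON instruction would do.

```cpp
#include <array>
#include <cstdint>

// Hypothetical portable stand-ins for float32x4_t and uint32x4_t.
using Float4 = std::array<float, 4>;
using Mask4  = std::array<std::uint32_t, 4>;

// NEON comparisons set every bit of a lane that passes the test.
constexpr std::uint32_t kAllBits = 0xFFFFFFFFu;

struct Vec3 { float x, y, z; };

// Four ray directions stored one component per array (structure of arrays).
struct Dir4 { Float4 x, y, z; };

// Cross product of four ray directions with one shared triangle edge.
Dir4 cross4(const Dir4& d, const Vec3& e) {
    Dir4 r{};
    for (int i = 0; i < 4; ++i) {
        r.x[i] = d.y[i] * e.z - d.z[i] * e.y;
        r.y[i] = d.z[i] * e.x - d.x[i] * e.z;
        r.z[i] = d.x[i] * e.y - d.y[i] * e.x;
    }
    return r;
}

// Barycentric test for four rays at once. No lane can return early, so each
// failed condition is recorded as a mask and the masks are combined at the end.
Mask4 barycentric_valid(const Float4& u, const Float4& v) {
    Mask4 valid{};
    for (int i = 0; i < 4; ++i) {
        const std::uint32_t uOutside  = (u[i] < 0.0f || u[i] > 1.0f) ? kAllBits : 0u;
        const std::uint32_t vOutside  = (v[i] < 0.0f || v[i] > 1.0f) ? kAllBits : 0u;
        const std::uint32_t uvGreater = (u[i] + v[i] > 1.0f) ? kAllBits : 0u;
        valid[i] = ~uOutside & ~vOutside & ~uvGreater;
    }
    return valid;
}
```

A lane of the returned mask is all ones only when every test passed for that ray. The cost of this approach is that all four rays pay for every step, even when some of them could have been rejected after the first check.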

This is the code that Copilot generated for triangle intersection, with comments added by me:

    // Fixed Ray-triangle intersection (Moller-Trumbore) vectorized batch-of-4
    static inline void intersect_triangle_batch(const Ray4& R,
                                                const Vec3& v0, const Vec3& v1, const Vec3& v2,
                                                const float colr, const float colg, const float colb,
                                                Hit4& out)
    {
        // compute the edges of the triangle in reference to v0
        Vec3 e1 = v1 - v0;
        Vec3 e2 = v2 - v0;

        // load vectors with each of the component values of the triangle,
        // which is represented as one vertex and two edges
        float32x4_t v0x = splat_f32(v0.x), v0y = splat_f32(v0.y), v0z = splat_f32(v0.z);
        float32x4_t e1x = splat_f32(e1.x), e1y = splat_f32(e1.y), e1z = splat_f32(e1.z);
        float32x4_t e2x = splat_f32(e2.x), e2y = splat_f32(e2.y), e2z = splat_f32(e2.z);

        // compute the cross product of each ray direction in R and the second edge
        float32x4_t hx = vsubq_f32(vmulq_f32(R.dy, e2z), vmulq_f32(R.dz, e2y));
        float32x4_t hy = vsubq_f32(vmulq_f32(R.dz, e2x), vmulq_f32(R.dx, e2z));
        float32x4_t hz = vsubq_f32(vmulq_f32(R.dx, e2y), vmulq_f32(R.dy, e2x));

        // compute the dot product of the first edge and the result from the prior step
        float32x4_t a = vaddq_f32(vaddq_f32(vmulq_f32(e1x, hx), vmulq_f32(e1y, hy)), vmulq_f32(e1z, hz));

        // tolerance below which the determinant is treated as zero (ray parallel to the triangle)
        const float EPS_F = 1e-6f;
        float32x4_t EPS = splat_f32(EPS_F);
        float32x4_t absa = vabsq_f32(a); // get the absolute value of the dot product

        // check if the absolute value is less than epsilon
        uint32x4_t parallelMask = vcltq_f32(absa, EPS);

        float32x4_t f = vrecpeq_f32(a);      // compute the estimated reciprocal of a
        f = vmulq_f32(f, vrecpsq_f32(a, f)); // refine the estimate with one Newton-Raphson step: f * (2 - a * f)

        // compute the difference between each ray origin in R and the vertex of the triangle
        float32x4_t sx = vsubq_f32(R.ox, v0x);
        float32x4_t sy = vsubq_f32(R.oy, v0y);
        float32x4_t sz = vsubq_f32(R.oz, v0z);

        // compute the dot product of h and s then multiply by f
        float32x4_t u = vmulq_f32(f, vaddq_f32(vaddq_f32(vmulq_f32(sx, hx), vmulq_f32(sy, hy)), vmulq_f32(sz, hz)));
        uint32x4_t uLess0 = vcltq_f32(u, splat_f32(0.0f));
        uint32x4_t uGreater1 = vcgtq_f32(u, splat_f32(1.0f));
        // use bitwise or to combine the results. If the bit is set then u is less than zero or greater than 1
        uint32x4_t uOutsideMask = vorrq_u32(uLess0, uGreater1);

        // compute the cross product of s and edge 1
        float32x4_t qx = vsubq_f32(vmulq_f32(sy, e1z), vmulq_f32(sz, e1y));
        float32x4_t qy = vsubq_f32(vmulq_f32(sz, e1x), vmulq_f32(sx, e1z));
        float32x4_t qz = vsubq_f32(vmulq_f32(sx, e1y), vmulq_f32(sy, e1x));

        // compute the dot product of each ray direction in R and q, then multiply by f
        float32x4_t v = vmulq_f32(f, vaddq_f32(vaddq_f32(vmulq_f32(R.dx, qx), vmulq_f32(R.dy, qy)), vmulq_f32(R.dz, qz)));
        uint32x4_t vLess0 = vcltq_f32(v, splat_f32(0.0f));
        uint32x4_t vGreater1 = vcgtq_f32(v, splat_f32(1.0f));
        // use bitwise or to combine the results. If the bit is set then v is less than zero or greater than 1
        uint32x4_t vOutsideMask = vorrq_u32(vLess0, vGreater1);

        // check if the sum of u and v is greater than 1
        float32x4_t uplusv = vaddq_f32(u, v);
        uint32x4_t uvGreater1 = vcgtq_f32(uplusv, splat_f32(1.0f));

        // computation of t
        float32x4_t t = vmulq_f32(f, vaddq_f32(vaddq_f32(vmulq_f32(e2x, qx), vmulq_f32(e2y, qy)), vmulq_f32(e2z, qz)));

        // bounds check of t against the values in R
        uint32x4_t tGeMin = vcgeq_f32(t, R.tmin);
        uint32x4_t tLeMax = vcleq_f32(t, R.tmax);

        // compute the bitwise not of the parallel mask
        uint32x4_t valid = vmvnq_u32(parallelMask);

        // use bitwise and to combine each check together
        // for the out of bounds checks vmvnq_u32 is applied first to invert the result
        valid = vandq_u32(valid, vmvnq_u32(uOutsideMask));
        valid = vandq_u32(valid, vmvnq_u32(vOutsideMask));
        valid = vandq_u32(valid, vmvnq_u32(uvGreater1));
        valid = vandq_u32(valid, tGeMin);
        valid = vandq_u32(valid, tLeMax);

        // a lane only counts as a new hit if it is also nearer than the hit already stored
        uint32x4_t nearer = vcltq_f32(t, out.t);
        uint32x4_t result = vandq_u32(valid, nearer);

        // if any of the pixels have a hit copy into the output hit4 vector
        if (vgetq_lane_u32(result,0) || vgetq_lane_u32(result,1) || vgetq_lane_u32(result,2) || vgetq_lane_u32(result,3)) {
            out.t = vbslq_f32(result, t, out.t);
            Vec3 n = normalize(cross(e1,e2));
            out.nx = vbslq_f32(result, splat_f32(n.x), out.nx);
            out.ny = vbslq_f32(result, splat_f32(n.y), out.ny);
            out.nz = vbslq_f32(result, splat_f32(n.z), out.nz);
            out.rr = vbslq_f32(result, splat_f32(colr), out.rr);
            out.rg = vbslq_f32(result, splat_f32(colg), out.rg);
            out.rb = vbslq_f32(result, splat_f32(colb), out.rb);
            out.hit = vorrq_u32(out.hit, result);
        }
    }

This implementation is written almost entirely with NEON intrinsics. The function `splat_f32` is a wrapper around the `vdupq_n_f32` intrinsic, which fills a vector with the same floating point value. It's really odd that Copilot generated this wrapper, as it doesn't save any space. The actual function implementation is this

    static inline float32x4_t splat_f32(float v){ return vdupq_n_f32(v); }

The reason why some declarations are very repetitive, like `float32x4_t hx = vsubq_f32(vmulq_f32(R.dy, e2z), vmulq_f32(R.dz, e2y));`, is that Copilot chose to generate unrolled implementations of vector mathematical functions like the cross product and the dot product. I'm also fairly certain there are a few things that could be changed to optimize the implementation, but I don't intend to go down that path right now. Overall I think there are several excellent points to call out with the generated code

- It makes effective use of NEON vector instructions to process 4 pixels at a time

- Implementations of common vector mathematics like the cross product are laid out in their standard form

- Variable naming is consistent and represents the value computed

Evaluating the utility of Copilot

The program I generated with Copilot is interesting enough to be more than a novelty. But what I am more interested in is trying to evaluate the actual usefulness of Copilot as an aid in software engineering.

What using Copilot reminds me of

In the mid 90s a search engine named AltaVista was created. AltaVista was at the time cutting edge, allowing users to quickly find content on the then quickly expanding Internet. Google search became widely available in the late 1990s and gradually gained popularity until it became the dominant search engine. The early functionality of Google Search was actually much more useful than the current version. Google search still supports a powerful syntax but at some point the utility of Google search started to degrade and has never really recovered. I think part of this is Google's push to put things in front of users that generate revenue like product links. Another source of this is personally tailored search results based on your internet activity. As it turns out, showing someone using search more of what they've already been engaging with probably is not very useful. The primary reason to use search would be to gain access to information you have not previously been exposed to. But enough of this digression, let me skip to the point I want to make.

In the first decade or so of Google search it had good enough functionality and enough indexed pages that you could start searching for something, review the first few pages of results, and determine whether you were getting anything remotely close to what you wanted to know about. By taking terms from those results and either adding them to the search terms or having Google specifically exclude results with those terms, you could narrow down your focus. This process could take a while and be a bit laborious but was overall worth it. Somewhere the content you wanted exists; you just need to filter out the content you don't want somehow. I don't really consider this practical in the modern incarnation of Google search. You can still use Google search to find information, but getting a specific & narrow set of results isn't possible any longer.

This process of making a query, reviewing the output & updating the original query is not significantly different from what I used with Copilot. When Copilot generated a section of code that I found useful, I could include it in my next query to make sure it was always there. When Copilot decided to use obsolete APIs, I could tell it not to do so. The process of utilizing the output from one query to determine the form of the next query is just a very basic feedback loop.

So the killer features of LLMs and specifically Copilot at present can be summarized into the following points:

- Copilot doesn't have any ads at present

- Copilot is good at maintaining an effective feedback loop. When the results of one query become part of the next query, Copilot is able to return meaningful results

- Copilot is able to approximate the semantic meaning of different sources closely and generate a single output product

I think point one speaks for itself: if someone puts a bunch of ads in Copilot, it won't be nearly as useful.

The second point I am making is just that the feedback loop I used with early search engines seems to work here with Copilot. This is partly because Copilot seems to be a well-designed product and secondarily just because the overall utility of products like Google Search has declined. Since I use the output of one query result to determine the next one, I suppose you can make an argument that there exists some element of Cybernetics here.

The third point here is probably the most interesting new development. Before the advent of LLMs in this decade there had never been any products that could combine content from multiple sources into something useful. I hesitate here to use the word "understand" because in my opinion the concepts of understanding, cognition, and consciousness still aren't universally agreed upon. What I think can be agreed upon here is that there is an alignment between the semantic meaning of my prompts to Copilot and that of the underlying source material used to produce the output. It doesn't matter whether this is understanding or not; the output is useful. The important thing to establish here is that if I wanted to find information about software ray tracing, there is no shortage of that on the internet. Finding a single website that shows example usage of ray tracing, NEON intrinsics, the Bullet physics engine, SDL & all the other things I wanted is significantly less likely. Copilot is apparently able to use distinct pieces of source material to assemble a single working product.

Can Copilot substitute for actual knowledge?

In this experiment I generated 634 lines of code which make up a useful, working program. Superficially it seems like I just had to prompt Copilot and compile the resulting code. But in order to do this I had to do the following

- Apply the general feedback loop described above to gradually refine Copilot's output

- Manually inspect code to find errors within it

- Apply domain specific knowledge, in this case vector calculus

- Compile and link 3rd party libraries with the source code to make an executable program

The question I pose to myself is: Is Copilot useful without over a decade of experience in software engineering? Even the process of refining the prompt relies extensively on specialized knowledge from the domain. For example, I had to be able to identify when obsolete APIs were being used and request that they not be used. I also removed a call to `SDL_Delay(8)` since I know it is useless.

In the case of finding the bug with the controls being inverted, I'm not even sure that having experience in software engineering is enough. You need to have an understanding of vector calculus to know how to correct that.

I completely omitted in my writeup here anything about compiling and linking 3rd party libraries. It isn't very interesting. But it is still mandatory to produce an executable. Copilot can help here but things like a build system are generally highly specialized to the specific needs of an application.

Without these skills to fall back on, I don't really see Copilot as a very useful code generation tool. It absolutely will generate code that can be compiled and run. But accomplishing the remaining 10% of refinement needed likely takes the skills of a software engineer anyway.